The term "feminism" refers to the belief in the social, political, and economic equality of the sexes. At its core, feminism is about empowering women to live their lives freely and without ...


Sex toys for women have evolved significantly over the years, offering a diverse ...

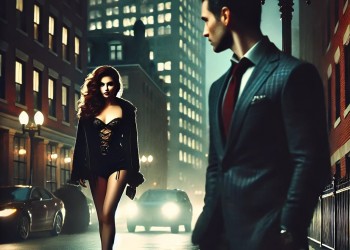

In today’s digital age, the escorting industry is more competitive than ever. ...

In today’s fast-paced world, many aspects of life feel rushed, and unfortu...

As we navigate through 2024, the relationship between sex workers and politics i...

Cancellations and no shows are an inevitable part of working in the escort indus...

The U.S. escort industry is constantly evolving, shaped by a variety of social, ...

In the bustling city of Los Angeles, where the lights never dim and the noise ne...

In the heart of a quiet, unassuming town in the Midwest, far removed from the bu...

In the heart of Boston, where historic cobblestone streets mingled with sleek, m...

Q: Do you have a list of clients that you see regularly that you have formed a sp...

Q: If a client decided to make you a present, what would you be happy to receive?